May 20, 2021

Thought-to-Text Interfaces are Just Around the Corner

By: burgundy bug

Brain charging and mental rest

Source: Adobe Stock

While mind-reading interfaces that convert thoughts to text sound like a dystopian sci-fi plot that could go horribly wrong disturbingly fast, the reality may not be so far away — or as fallible, either.

When you first hear “thought to text,” your knee-jerk response might be, “Uhh, no way. What if I have an intrusive thought that I don’t really mean to send? Or an impulse to text someone I know I shouldn’t?” But the most recent brain-computer interface studies don’t rely on decoding your internal monologue or raw thoughts.

Rather, researchers have programmed a brain-computer interface that decodes “attempted handwriting movements” from motor cortex activity. This technology allows people who have been paralyzed for years to imagine handwriting and have it translated into text at speeds of 90 characters per minute with 94.1 percent accuracy, which is comparable to average smartphone typing speeds.

Furthermore, that accuracy climbs to 99 percent with general-purpose autocorrect.

“Previous BCI [brain-computer interfaces] have shown that the motor intention for gross motor skills, such as reaching, grasping, or moving a computer cursor, remains neurally encoded in the motor cortex after paralysis,” the study begins.

However, it was previously unknown whether the neural representation of more “rapid and highly dexterous” movements, such as handwriting, also remained intact.

Putting this theory to the test, researchers instructed study participant “T5” to “‘attempt’ to write as if his hands were not paralyzed while imagining that he was holding a pen on a piece of ruled paper,” the study explains. T5 has a high level of spinal cord injury, which has left him paralyzed from the neck down.

T5’s brain activity was recorded using microelectrodes in the “hand” area of the precentral gyrus, which is in the premotor cortex.

Anatomy of the human brain

Source: Adobe Stock

But first, the researchers had to figure out how the brain processes and executes handwritten tasks.

Encoding Handwriting in the Brain

To get to the bottom of the question, researchers had to decipher how the brain encodes the neural activity needed to draw the shape of each letter. They found the most accurate measure was pen-tip velocity, so they linearly decoded this measure from the average neural activity observed across different trials.
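The idea of linearly decoding a movement variable from neural activity can be illustrated with a toy example. The sketch below is not the study's decoder: it assumes two made-up recording channels, synthetic firing rates, and hypothetical tuning weights, and it fits a least-squares mapping from those rates to a single pen-tip velocity component.

```python
import random


def fit_linear_decoder(rates, velocity):
    """Least-squares weights (w0, w1) so that velocity ~= w0*r0 + w1*r1."""
    # Entries of the 2x2 normal equations (R^T R) w = R^T v.
    s00 = sum(r0 * r0 for r0, _ in rates)
    s01 = sum(r0 * r1 for r0, r1 in rates)
    s11 = sum(r1 * r1 for _, r1 in rates)
    b0 = sum(r0 * v for (r0, _), v in zip(rates, velocity))
    b1 = sum(r1 * v for (_, r1), v in zip(rates, velocity))
    det = s00 * s11 - s01 * s01
    # Closed-form solution of the 2x2 system (Cramer's rule).
    return (s11 * b0 - s01 * b1) / det, (s00 * b1 - s01 * b0) / det


random.seed(0)
TRUE_WEIGHTS = (0.5, -0.2)  # hypothetical tuning, not taken from the study

# Synthetic firing rates for two made-up channels at 200 time steps.
rates = [(random.random(), random.random()) for _ in range(200)]

# Synthetic velocity: a linear mix of the rates plus a little noise.
velocity = [
    TRUE_WEIGHTS[0] * r0 + TRUE_WEIGHTS[1] * r1 + random.gauss(0, 0.01)
    for r0, r1 in rates
]

w0, w1 = fit_linear_decoder(rates, velocity)
decoded = [w0 * r0 + w1 * r1 for r0, r1 in rates]
print(f"recovered weights: {w0:.2f}, {w1:.2f}")
```

With real recordings there would be many more channels and two velocity components, but the principle is the same: if a simple weighted sum of neural activity reproduces the pen trajectory, the trajectory is encoded in that activity.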

“Readily recognizable letter shapes confirmed that pen-tip velocity is robustly encoded,” the study reports. “The neural dimensions that represented pen-tip velocity accounted for 30 percent of the total neural variance. Next, we used a nonlinear dimensionality reduction method to produce a two-dimensional visualization of each single trial’s neural activity recorded after the ‘go’ cue was given.”

This 2D visualization showed there are tight clusters of neural activity for each character, and those that share similar shapes have clusters of activity that are closer together.

For example, a lowercase “o” and “a” share a round base, and a lowercase “b” and “p” are the same shape flipped vertically. As a result, the clusters of neural activity that encode how an “o” and “a” are written (or a “b” and “p”) neighbor each other.

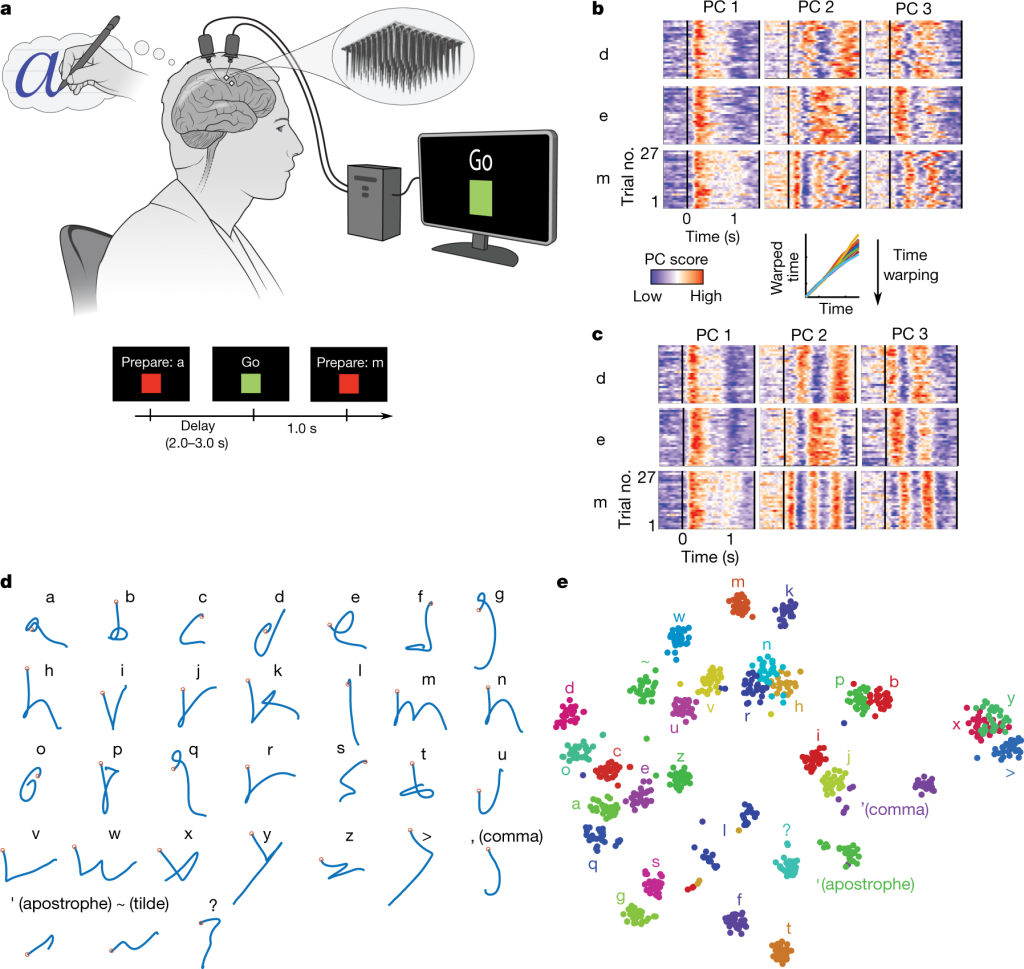

a, To assess the neural representation of attempted handwriting, participant T5 attempted to handwrite each character one at a time, following the instructions given on a computer screen (bottom panels depict what is shown on the screen, following the timeline). Credit: drawing of the human silhouette created by E. Woodrum.

b, Neural activity in the top 3 principal components (PCs) is shown for three example letters (d, e and m) and 27 repetitions of each letter (trials). The colour scale was normalized within each panel separately for visualization.

c, Time-warping the neural activity to remove trial-to-trial changes in writing speed reveals consistent patterns of activity unique to each letter. In the inset above c, example time-warping functions are shown for the letter ‘m’ and lie relatively close to the identity line (the warping function of each trial is plotted with a different coloured line).

d, Decoded pen trajectories are shown for all 31 tested characters. Intended 2D pen-tip velocity was linearly decoded from the neural activity using cross-validation (each character was held out), and decoder output was denoised by averaging across trials. Orange circles denote the start of the trajectory.

e, A 2D visualization of the neural activity made using t-SNE. Each circle is a single trial (27 trials are shown for each of 31 characters).

Source: Fig. 1: Neural representation of attempted handwriting. | High-performance brain-to-text communication via handwriting

Decoding Handwritten Sentences

Once the researchers identified the neural activity for each character, it was time to string everything together to form sentences. To do so, they trained a recurrent neural network (RNN) to estimate, from T5’s neural activity, which character he was most likely attempting to write at each moment.

T5 copied each sentence from a prompt on the screen, continuing to “attempt” to handwrite each character. His imagined handwriting appeared as typed letters on-screen with a 0.4 to 0.7 second delay.

It’s also worth noting the sentences T5 was instructed to write were ones the RNN had never been trained on. This ensured the results reflected genuine decoding of his neural activity rather than sentences the algorithm had already memorized.

“When a language model was used to autocorrect errors offline, error rates decreased considerably,” the study continues. “The character error rate decreased to 0.89 percent and the word error rate decreased to 3.4 percent averaged across all days, which is comparable to state-of-the-art speech recognition systems with word error rates of 4 to 5 percent, putting it well within the range of usability.”
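Character and word error rates like those quoted above are commonly computed as an edit distance (the number of insertions, deletions, and substitutions needed to turn the decoded text into the intended text) divided by the length of the intended text. The sketch below assumes that common definition; the function names and example sentences are made up for illustration.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or word lists)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(
                prev[j] + 1,             # deletion
                cur[j - 1] + 1,          # insertion
                prev[j - 1] + (r != h),  # substitution (free if equal)
            ))
        prev = cur
    return prev[-1]


def char_error_rate(reference, typed):
    """Character-level edits divided by the number of reference characters."""
    return edit_distance(reference, typed) / len(reference)


def word_error_rate(reference, typed):
    """Word-level edits divided by the number of reference words."""
    ref_words = reference.split()
    return edit_distance(ref_words, typed.split()) / len(ref_words)


reference = "hello world"  # what the writer intended (made-up example)
typed = "hallo world"      # what the decoder produced (made-up example)

print(f"character error rate: {char_error_rate(reference, typed):.1%}")
print(f"word error rate: {word_error_rate(reference, typed):.1%}")
```

A single wrong character makes the whole word wrong, which is why a word error rate is typically several times higher than the character error rate for the same text.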

Afterward, they had T5 use the BCI to free-write answers to open-ended questions. This further demonstrated the technology’s application in scenarios with self-generated responses, as opposed to simply copying prompts on the screen.

T5 self-generated sentences at a rate of 73.8 characters per minute, with an error rate of 8.54 percent in real-time. When the language model was applied, the error rate decreased to 2.25 percent.

Handwriting vs. Point-and-Click Typing

Previous examples of BCIs have implemented “point-and-click typing,” in which the individual imagines typing by moving the cursor over each character and mentally clicking. Point-and-click models peak at 40 correct characters per minute, whereas the imagined handwriting method peaks at 90 correct characters per minute.

After comparing the neural activity involved in moving a cursor vs. handwriting, the researchers observed it’s easier for an algorithm to classify individual letters than the straight-line movements used to control a cursor.

The increase in speed and accuracy may arise because the neural activity patterns for handwritten letters sit farther from their “nearest neighbors” than the patterns for cursor movements do, which makes them easier to tell apart, the researchers hypothesize.

“We think this is one — but not necessarily the only — important factor that enabled a handwriting BCI to go faster than continuous-motion point-and-click BCIs,” the study says. “…More generally, using the principle of maximizing the nearest neighbor distance between movements, it should be possible to optimize a set of movements for ease of classification.”
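The nearest neighbor distance mentioned in the quote can be made concrete with a toy example. The sketch below is not the study's analysis: it assumes each movement is summarized by a made-up 2D point standing in for its neural pattern, and it reports how far each movement is from its closest rival. Larger values mean a movement is harder to confuse with any other.

```python
import math


def nearest_neighbor_distances(patterns):
    """Map each movement name to the distance of its closest other movement."""
    return {
        name: min(
            math.dist(vec, other_vec)
            for other_name, other_vec in patterns.items()
            if other_name != name
        )
        for name, vec in patterns.items()
    }


# Hypothetical 2D stand-ins for neural patterns (not data from the study).
crowded = {"a": (0.0, 0.0), "b": (3.0, 4.0), "c": (0.0, 1.0)}
spread_out = {"a": (0.0, 0.0), "b": (3.0, 4.0), "c": (-4.0, 3.0)}

for label, patterns in (("crowded", crowded), ("spread out", spread_out)):
    distances = nearest_neighbor_distances(patterns)
    print(label, "smallest nearest neighbor distance:", min(distances.values()))
```

In the crowded set, "a" and "c" sit only one unit apart, so a noisy measurement of one could easily be mistaken for the other. Moving "c" away raises the smallest nearest neighbor distance, which is the sense in which a set of movements could be optimized for ease of classification.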

A short video summary of the BCI study described throughout this article

Source: Brain Computer Interface Turns Mental Handwriting into Text on Screen | HHMI Howard Hughes Medical Institute

Imagined Handwriting vs. Internal Monologue: What’s the Difference?

Before diving down the rabbit hole of how a handwriting BCI differs from “translating thoughts” or “internal monologue,” now’s a good time to mention that not everyone has an internal monologue.

A 2008 Consciousness and Cognition study assessed the “inner experiences” of its participants and found:

- 26 percent experience inner speech or an internal monologue

- 34 percent experience inner seeing or visualization

- 22 percent experience unsymbolized thinking

- 26 percent experience inner feeling

- 22 percent experience sensory awareness

Our internal experiences widely differ from one individual to the next — there’s no “normal” or “one-size-fits-all” approach to thoughts. That’s simply the beauty of the brain.

Just as the premotor cortex activates when imagining handwriting, the brain areas associated with speech, hearing, seeing, emotions, and sensory processing activate during the corresponding thought processes.

Read: The brain’s conversation with itself: neural substrates of dialogic inner speech

Source: Social Cognitive and Affective Neuroscience

Therefore, creating a BCI that translates raw thoughts would take a great understanding and appreciation of this internal variation. A very large-scale study would likely be needed to bolster the accuracy of converting raw thoughts into text.

Handwriting, on the other hand, is more consistent. While we all have unique handwriting, the basic strokes of printed letters vary little from person to person: most Latin characters begin with a downstroke or counter-clockwise curl. These rudimentary motor skills are also built upon from early childhood onward, making these patterns quite “hardwired” into the brain.

Furthermore, the process of imagining handwriting seems more deliberate than other internal thought processes. In the case of internal speech, you could be actively thinking about something while a song that’s stuck in your head is looping in the background. Filtering out this “noise” would be more complex than training an algorithm to translate imagined or attempted handwriting.

In Conclusion

A dystopian future in which mind-reading chips are implanted in our brains and accidental impulse texts are sent to our exes is still a long way off from current brain-computer interface technologies.

However, recent advancements in BCIs demonstrate the potential to enable thought-to-text communication for those who live with severe paralysis. Rather than translating raw thoughts, this technology relies on the individual imagining they’re holding a pen and “attempting” to draw each letter.

“Here, we introduced a new approach for communication BCIs — decoding a rapid, dexterous motor behavior in an individual with tetraplegia — that sets a benchmark for communication rate at 90 characters per minute,” the study says. “The real-time system is general (the user can express any sentence), easy to use (entirely self-paced and the eyes are free to move), and accurate enough to be useful in the real world.”

But this study is simply a proof of concept. “It’s not yet a complete, clinically viable system,” the study continues. Further studies are needed to demonstrate functionality among more participants, and additional advancements are needed to expand the character set. The ability to edit and delete previous letters is also needed.

“Nevertheless, we believe that the future of intracortical BCIs is bright,” the study concludes. “Current microelectrode array technology has been shown to retain functionality for more than 1,000 days after implant, and has enabled the highest BCI performance so far compared to other recording technologies for restoring communication, arm control, and general-purpose computer use.”
